Abstract

In information science related problems (such as document clustering), the objects are generally described with the vector space model. This is especially true for poorly semantically structured documents (for example PDF). This approach doesn’t take other types of information into account (for example metadata or the structure of XML documents). In this article, a tensor space model is presented. It is a simple adaptation of the extended vector model.

Table of Contents

1.2. A Second Example: HTML Documents

1.3. A Third Example: XML Documents

1. Introduction↑

Despite the fact that most documents available today are poorly semantically structured, solutions were developed to overcome this. For years, document management systems add metadata to the documents stored, and several document types propose them in some way (headers of emails, <META> tag in HTML or the “properties” of office documents). More recently, the W3C introduces the XML as a set of technologies to structure documents.

Today, more and more documents in intranets are XML ones, and their number increases on the Internet too. Despite the numerous existing XML databases, there is a lack of tools using the structure to solve several classical problems such as keyword-based queries for information retrieval or clustering of XML documents. Moreover, it is still difficult to manage collections (such as the Internet) where XML documents are mixed with non-XML documents (such as Postscript, PDF, etc.).

1.1. A First Example: emails↑

A simple email is a text document divided into two parts (generally separated by a blank line): the header and the content. The following text proposed a simple example of an email:

From: pascal@source.org (Pascal Francq)

Subject: Example of an email

Article-I.D.: src.17194

Date: Thu, 20 Jul 2006 10:08:50 +0200

Distribution: Paul Otlet Institute

Do you buy the remaster version of the In Rock album from Deep Purple?

Pascal

The header contains several fields such as the author (From), the subject or the date when the email was send. These fields are non only structured metadata that enhances the content of the email, but it provides also information that links emails (for example emails sent by the same author or sharing an identical subject 1) .

1.2. A Second Example: HTML Documents↑

HTML are text documents enhanced with presentation and hyperlink related tags and attributes [1]. The hyperlinks between HTML documents form the Web structure. The following text proposed two simple HTML documents:

<html>

<body>

This is a link to the <a href=”mysite.com/doc2.html”>second document</a>.

</body>

</html>

<html>

<body>

This is a link to the <a href=”mysite.com/doc1.html”>first document</a>.

</body>

</html>

Hyperlinks convey some information. In particular, a hyperlink from a document dm to the document dnmay be seen as if dm recognizes a given authority to dn. This assumption is in fact the base of the famous PageRank ranking method of Google [2].

1.3. A Third Example: XML Documents↑

XML documents are characterized by text-oriented content structured with tags and attributes [3]. The following text proposed a simple example of an XML document:

<?xml version="1.0" standalone="no"?>

<discography xmlns="http://music.org/">

<group groupID="Deep Purple">

<groupName> Deep Purple </groupName>

<album>

<albumName> In Rock </albumName>

<published> 1969 </published>

</album>

</group>

</discography>

A XML structure is a tree containing four different types of tokens:

- tags

- Elements structuring the document.

- attributes

- Elements related to tags and influencing the structure.

- attributes values

- Most attributes are associated with a given value (for example groupID=”Deep Purple”).

- content

- The textual content corresponds to the “knowledge” encapsulated into XML documents.

In a XML document, knowledge expressed textually is associated to tags and attributes that are supposed to add structure information to the document. These structure information of XML documents (the tree of tags, attributes and attributes values) are defined through XML schema.

Every XML schema can be build upon existing XML schema. In the emerging semantic Web [4], the documents depend from application-specific XML schema (for example technical reports) built on top of generic XML schema (for example to represent a person or the abstract of a document). In this context, XML documents with similar tags and attributes (using elements of common XML schema) are supposed to be related to “something common” (from the content or the functional point of view).

For example, in a corpus of XML documents dedicated to clinical tests, similar XML parts (tags and content) may appear in different documents related to the same authors (using tags from the Dublin Core Metadata [5]) or the same chemical molecules (using tags from the Chemical Markup Language [6]).

1.4. Vector Space Model↑

Historically, in information science, the documents were represented as bags of words [7]. Several filtering techniques are used to carefully choose the sequences of characters (tokens) indexing the documents in order to limit the amount of memory needed to represent the documents and to speed up the treatment.

The vector space model was introduced to represent documents in search engines, but it can be used to represent any kind of objects. In this model, the objects (for example documents) are represented as a set of features,

For textual documents, the features are selected terms contained in the documents, and \(e_{i,n}\) is the number of occurrences of these terms. As explained, some filtering techniques can be used, such as replacing the different words by their stems (“connects”, “connected”, etc. are replaced by “connect”) and to remove the stopwords (“and”, “or”, “the”, etc. in English). These techniques are well known [9], and some of them are implemented by the GALILEI platform when it builds a document description.

1.5. Our Approach↑

As the examples shown, new types of information appear in documents. In particular, the metadata, the (hyper)links, and the structure information provided by XML tags should certainly contribute to the representations of documents. Therefore, a major issue in information science is to propose a model to represent structured documents in general, XML documents in particular.

In practice, since most collections do not content structure documents only, a main constraint for the model is to manage documents with different levels of semantic information. Moreover, numerous algorithms, from information retrieval [9] to social browsing [11], were developed for non-structured textual documents. Ideally, the new model representing documents should be “as much compatible as possible” to the previous model in order to take advantage of existing researches in information science.

Previously, extended vector space models were proposed to take new kind of information into account [12, 13]. The GALILEI framework adapts these models for objects (such as XML documents). Specifically, it is suggested that each object is represented by a tensor in a concept space. This adaptation of the classical vector space model has a major advantage. Since there exist several algorithms using the vector space model, the proposed approach makes existing algorithms easily adaptable.

In particular, if a new similarity measure taking the richness if the tensors into account exists (as the one proposed in the GALILEI framework), most information science algorithms (such as the documents clustering, users profiling or users clustering) should work without any changes (and use indirectly richest features if available). Moreover, collections mixing XML documents and non-structured documents can be managed.

2. Tensor Space Model↑

As explained in the previous section, taking richer information into account to represent documents (such as XML ones) is useful. We present here a model where each object is represented by a tensor in a concept space.

2.1. Concept space↑

We suppose that the objects are indexed in a concept space, \(\mathfrak{C}\), formed by concepts, \(c_i\). A concept can be a term, a stopword, a metadata, a text block, an Uniform Resource Identifier (URI), a Digital Object Identifier (DOI), an XML tag or attribute, a language-independent meaningful entity (such as names, cities, or organizations) [14], etc. It should be noticed than some concepts (for example the document content) is itself a set of concepts (such as the terms contained in the document).

Each concept, \(c_i\), has a given concept type, \(\tau_{i}\). A concept type identifies concepts that share a same “worldview”, for example metadata from the Dublin Core Metadata [5], tags and attributes from a same XML schema, French or English stopwords, an identical interpretation of character strings (such as URI ou DOI), etc. Each concept type acts as a namespace for the corresponding concepts, i.e. the character string representation of a concept must be unique in a given concept type. Moreover, we suppose that each concept type defines one neutral concept, “*”, that represents any set of concepts of that type.

Finally, concept types are regrouped into concept categories that are related to the “nature” of the concept (such as textual content). The GALILEI framework defines five concept categories:

- text

- Textual content (stopwords, “normal” words, groups of words, etc.).

- metadata

- Content representing a kind of descriptive metadata (author, abstract, etc.) or technical one (such as specialized expressions from existing taxonomies).

- link

- Content representing (hyper)links (such as URI).

- structure

- Structure information (such as XML tags and attributes).

- predicate

- First-logic predicates formed by concepts triplets.

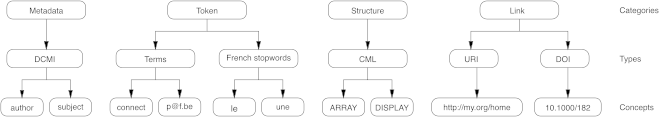

Figure 1 illustrates the hierarchy of concepts.

Figure 1. Concept hierarchy.

The concept types and categories do not influence the concept space, they serve only to organize “semantically” the concepts. The GALILEI framework uses this information, for example, to compute similarity measures. Let us define two functions: \(T(c_{i})\) that returns the type of a given concept, \(c_i\); and \(C(c_{i})\) that returns the category of a given concept, \(c_i\).

2.2. Concept Independence↑

This model shares an hypothesis with the classic vector space model : concepts (such as index terms) are independent. This mean that the presence of a given concept (for example the term “deep”) provides no information on the presence of another concept (for example the term “purple”). This hypothesis is, of course, a simplification: concepts in general, and terms in particular, are dependent. In practice, however, this hypothesis is acceptable.

First of all, taking the dependance into account is a tricky problem: it complicates the model, increases the computational power needed (in particular for a large corpus), and the existing attempts don’t performed better [9].

Secondly, since our tensor space model is statistical (as the classic vector space model), dependencies can be taken into account indirectly through the co-occurrences of the concepts. To illustrate this, let us suppose two domains: genetic algorithms (described with terms such as “genetic”, “algorithm”, “performance”, “chromosome”, “heuristic”, etc.) and genetics (described with terms such as “genetic”, “biology”, “chromosome”, “heredity”, etc.). To describe these domains, the terms “genetic” and “chromosome” are not enough, we must combine them, i.e. they are dependent from other terms.

Yet, it is reasonable to suppose that documents about genetic algorithms will also contained “heuristic” or “performance”, while the terms “biology” and “heredity” will appear in documents about genetics. This means that their descriptions don’t include the terms “genetic” and “chromosome” alone, but will also be defined by other terms in order to make clean distinction between the two domains.

Thirdly, it is possible to create concepts that encapsulates some dependencies. Let us take the previous example. A new concept type, “scientific domain” (text), can be added. Two concepts of that type can be created: “genetic algorithms” and “genetics”. These concepts can then be included in the object representations (such as document).

2.3. Tensor representation↑

In his model, Fox suggests to associate to a given object a (sub)vector for different types of concepts called c-types (authors, bibliographical links, etc.). [15]. If Fox’s model is attractive, it has a little drawback: his c-types are specific to a particular document collection (INEX 2005). Therefore, I propose a generalization of it. In fact, for any document corpus, we may suppose that each object has multiple vectors representing it, each vector being associated to a particular meta-concept (what Fox called a c-type).

Le us imagine that an object (for example a document), \(o_v\), has an “author” metadata which is “Pascal Francq”, and contents the single sentence “The connections are optical fibers”. Moreover, we suppose that we remove the stopwords and take only the stems into account. One possible representation of the object can be built with two elements:

- One metadata meta-concept “author” (of the concept type “DMCI”) defined by the two text terms “Pascal” and “Francq”2 .

- One text meta-concept “*” (of the concept type “Document Content”) defined by the three terms “connect”, “optic” and “fiber”.

Each element may be represented by a vector in the concept space: the first one will have two non-zero elements, the second one having three non-zero elements. As explained above, “author” and “*” are themselves concepts. Therefore, the object can be represented has a tensor, \(\hat{o_{v}}=[o_{ij,v}]\) where \(o_{ij,v}\) represents the weight of the concept \(c_j\) for a vector associated with the meta-concept ci. This model makes two assumptions:

- Multiple elements associated to a common meta-concept (for example two authors) are represented as a single vector.

- Recurrent descriptions are prohibited, i.e. \(o_{ii,v}=0\,\forall i\).

The weights depend on the method used to compute the object descriptions. For example, in the case of a document, the weights of a concept can be the number of its occurrences in the part document represented by the corresponding meta-concept.

Let us suppose that the space contains 8 concepts (types and categories are also given): \(c_0\) represents “author” (“DMCI”, “metadata”) , \(c_1\) represents the neutral concept “*” (“Document Content”, “structure”), \(c_2\) represents the term “Pascal” (“term”, “text”), \(c_3\) represents the term “Francq” (“term”, “text”), \(c_4\) represents the term “coffee” (“term”, “text”), \(c_5\) represents the term “ connect” (“term”, “text”), \(c_6\) represents the term “optic” (“term”, “text”) and \(c_7\) represents the concept “fiber” (“term”, “text”).

The object \(o_v\) is represented by the following tensor:

\begin{align}\hat{o}_{v}&=&\left[\begin{array}{cccccccc} 0 & 0 & 1 & 1 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0 & 1 & 1 & 1\\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \end{array}\right]\end{align}

As this example shows, the matrix representing a tensor is highly sparse. This tensor \(\hat{o}_{v}\) can be seen as a set of vectors \(\vec{o}_{i,v}\), each vector being associated to a particular meta-concept, \(c_i\). As for the concepts, we can define two functions: \(T(\vec{o}_{i,v})\) that returns the type of the meta-concept, \(c_i\), associated to the vector; and \(C(\vec{o}_{i,v})\) that returns the category of that meta-concept.

If we define only one meta-concept (for example \(\phi_{\mathrm{text\,block}}\)), the tensor space model is identical to the classical vector space model. The tensor space model can therefore be qualified as an extension of the vector space model if the method used to compute the document descriptions can extract multiple meta-concepts.

3. Inverse Object Frequency↑

3.1. Feature Space↑

In the classical vector space model, the inverse frequency factor, if, plays an important role in the weighting of the terms in the vector (document representation). The inverse frequency factor, \(if_i\), for a feature \(f_i\) is defined by:

\begin{align}if_{i}&=&\log\frac{|\mathcal{D}|}{|\mathcal{D}_{i}|}\end{align}

where \(|\mathcal{D}|\) is the total number of documents, and \(|\mathcal{D}_{i}|\) the number of those containing the feature \(f_i\).

For a given number of documents, \(if_i\) increases when \(|\mathcal{D}_{i}|\) decreases: the discriminant value of a given feature decreases when it occurs often in the corpus. In fact, its value is null if it appears in each document of the corpus.

This factor can be generalized to any kind objects (documents, profiles, etc.) that are described with terms [8]:

\begin{align}if_{i}(\mathcal{O})&=&\log\frac{|\mathcal{O}|}{|\mathcal{O}_{i}|}\end{align}

where \(|\mathcal{O}|\) is the total number of objects, and \(|O_{i}|\) the number of those containing the feature \(f_i\).

3.2. Concept Space↑

The inverse frequency factor could be extended to the concept space. After all, “concept” is just another name for “feature”. But a concept “is more” than a feature: it has a type.

Let us suppose, for example, a subset, \(\mathcal{O}’\), of all objects of \(\mathcal{O}\) that contains some email addresses (considered as a particular concept type). Moreover, let us suppose that a given email address, \(c_i\), is always present in these objects. Its \(if_{i}(\mathcal{O})\) factor is computed by:

\[if_{i}(\mathcal{O})=\log\frac{|\mathcal{O}|}{|\mathcal{O}_{i}|}=\log\frac{|\mathcal{O}\mathcal{\setminus O}’|+|\mathcal{O}’|}{|\mathcal{O}’|}\]

where \(\mathcal{O}\mathcal{\setminus O}’\) represents the set of objects which don’t contain any email address.

In practice, since \(c_i\) is contained in each object providing some email addresses, its discriminant value should be null. But, due to the quantity \(|\mathcal{O}\mathcal{\setminus O}’|\), it is not the case. Therefore, a modification is proposed to compute the inverse object frequency factor, \(if_{i}(\mathcal{O})\), for concept, \(c_i\):

\[iof_{i}(\mathcal{O})=\log\frac{|\mathcal{O}_{T(c_{i})}|}{|\mathcal{O}_{T(c_{i}),i}|}\]

where \(|\mathcal{O}_{T(c_{i})}|\) the total number of objects described by at least one concept of the same type as \(c_i\), and \(|\mathcal{O}_{T(c_{i}),i}|\) the number of those containing the concept \(c_i\).

When applying this to compute the inverse object frequency factor in the case of the email address just presented, its value is now null since \(|\mathcal{O}_{T(c_{i})}|=|\mathcal{O}_{T(c_{i}),i}|=|\mathcal{O}’|\).

4. Concept Weighting↑

The document descriptions computes tensors which associate to each meta-concept/concept pair, \((c_{i},c_{j})\)3 , extracted from a document, \(d_m\), a quantity, \(o_{ij,m}\), that reflects its importance (for example, the number of occurrences of a term in a particular metadata of the document). Before using these tensors in further tasks, such as computing a document similarity or a profile description, it is necessary to adapt these weights in order to take the whole document corpus into account.

Several methods adapt these weights [9]. One of the most used in information retrieval is based on the term frequency (\(tf\)) and inverse document frequency (\(idf\)) factors [7, 10]:

\[o_{i,n}=tf\cdot idf=\frac{e_{i,n}}{\max_{l}\,e_{l,n}}\cdot\log\frac{|\mathcal{D}|}{|\mathcal{D}_{i}|}\]

where \(o_{i,n}\) represents the weights of an element in the vector, \(|\mathcal{D}\) the total number of documents, \(|\mathcal{D}_{i}|\) the number of those containing feature \(f_i\) and \(e_{i,n}\) is the occurrence of the feature \(f_i\) for the document \(d_n\).

These factors have an interpretation: the \(tf\) is a sort of document length normalization while the \(idf\) infers the importance of a particular feature regarding its distribution in the corpus (a feature appearing very often in the documents of the corpus has a less importance).

In the previous section, I explained how the inverse object frequency factor has to be adapted to a concept space where the concepts are structured in types. Similar reasoning can be done with the term frequency factor where the normalization of a particular weight is done regarding the corresponding vector (and not the whole tensor).

It is therefore possible to propose a concept weighting method for the tensor space model that transform a local weight \(o_{ij,n}\) (such as the occurrence of a term in a given part of a document):

\begin{align}W_{\mathcal{O}}(\hat{o}_{v})=&\begin{cases} 0 & i=j\\ \frac{o_{ij,v}}{\max_{l\mid l\neq i}\left|o_{il,v}\right|}\cdot\log\frac{|\mathcal{O}_{T(c_{j})}|}{|\mathcal{O}_{T(c_{j}),j}|} & i\neq j \end{cases}\end{align}

where \(W_{\mathcal{O}}(\hat{o}_{v})\) is also a tensor, and \(\left|o_{il,v}\right|\) represents the absolute value of \(o_{il,v}\) and its uses is necessary because, for some objects (such as profiles), the weights may be negative.

This formula can be adapted to any subset of objects, \(\mathcal{S}\):

\begin{align}W_{\mathcal{S}}(\hat{o}_{v})=&\begin{cases} 0 & i=j\\ \frac{o_{ij,v}}{\max_{l\mid l\neq i}|o_{il,v}|}\cdot\log\frac{|\mathcal{S}{}_{T(c_{j})}|}{|\mathcal{S}{}_{T(c_{j}),j}|} & i\neq j \end{cases}\end{align}

where \(W_{\mathcal{S}}(\hat{o}_{v})\) is also a tensor, and \(\left|\mathcal{S}_{T(c_{i})}\right|\) is the total number of objects of the subset described by at least one concept of the same type as \(c_i\), and \(|\mathcal{S}{}_{T(c_{i}),i}|\) the number of those containing the concept \(c_i\).

This latest formula allows to act as a magnifying glass that focuses on a particular subset of objects (for example all the documents assessed by a user with a given profile).

5. Tensor Operators↑

The classical vector space model has two nice properties:

- The sum of the vectors describing two documents, \(d_1\) and \(d_2\), equals the vector build from a document obtained by concatenating \(d_1\) and \(d_2\);

- The multiplication of a vector describing a document, \(d_1\), by a number, \(x\), equals the vector build from a document obtained by concatenating \(x\) times \(d_1\).

These properties are respected by the tensor space model proposed. To show that, let us suppose the following two tensors :

\begin{align}\hat{o}_{1}=\left[\begin{array}{ccccc} 0 & 0 & 2 & 1 & 3\\ 0 & 0 & 1 & 3 & 2\\ 0 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0 \end{array}\right]&&\hat{o}_{2}=\left[\begin{array}{ccccc} 0 & 0 & 1 & 2 & 0\\ 0 & 0 & 2 & 0 & 3\\ 0 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0 \end{array}\right]\end{align}

where \(c_1\) and \(c_2\) represent metadata concepts (such as “author”) and the other concepts correspond to terms.

The sum of the two tensors respects the first property:

\begin{align}\hat{o}_{1}+\hat{o}_{1}&=&\left[\begin{array}{ccccc} 0 & 0 & 3 & 3 & 3\\ 0 & 0 & 3 & 3 & 5\\ 0 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0 \end{array}\right]\end{align}

The multiplication of the tensor \(\hat{o}_{1}\) by \(3\) respects the second property:

\begin{align}3\times\hat{o}_{1}&=&\left[\begin{array}{ccccc} 0 & 0 & 6 & 3 & 9\\ 0 & 0 & 3 & 9 & 6\\ 0 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0 \end{array}\right]\end{align}

Therefore, every method developed for the vector space model that combines vectors with the sum and scalar multiplication operators works unchanged for the tensor space model proposed.

References↑

[1] World Wide Web Consortium, HTML 4.01 Specification, 1999.

[2] Sergey Brin & Lawrence Page, “The Anatomy of a Large-Scale Hypertextual Web Search Engine”, Computer Networks and ISDN Systems, 30(1), pp. 107—135, 1998.

[3] World Wide Web Consortium, eXtensible Stylesheet Language (XSL) Version 1.0, 2000.

[4] Tim Berners-Lee, James Hendler & Ora Lassila, “The Semantic Web”, Scientific American, 284(5), pp. 28—37, 2001.

[5] Dublin Core Metadata Initiative, Dublin Core Metadata Element Set, Version 1.1: Reference Description, 1999.

[6] Peter Murray-Rust & Henry S. Rzepa, “CML: Evolution and Design”, Journal of Cheminformatics, 3(1), pp. 1—15, 2011.

[7] Gerard Salton, Automatic Information Organization and Retrieval, McGraw-Hill, 1968.

[8] Pascal Francq, Collaborative and Structured Search: An Integrated Approach for Sharing Documents Among Users, PhD Thesis, Université libre de Bruxelles, 2003.

[9] Ricardo Baeza-Yates & Berthier Ribeiro-Neto, Modern Information Retrieval: The Concepts and Technology behind Search, Addison-Wesley, 2011.

[10] Gerard Salton, The SMART Retrieval System — Experiments in Automatic Document Processing, Prentice Hall, 1971.

[11] Pascal Francq, “The GALILEI Platform: Social Browsing to Build Communities of Interests and Share Relevant Information and Expertise” in Open Source for Knowledge and Learning Management: Strategies Beyond Tools, Miltiadis D. Lytras & Ambjorn Naeve (ed.), Idea Group Publishing, 2007.

[12] Gerald Salton, Edward A. Fox & Harry Wu, “Extended Boolean Information Retrieval”, Communications of the ACM, 26(11), pp. 1022—1036, 1983.

[13] Carolyn J. Crouch, Samer Apte, Harsh Bapat, “Using the Extended Vector Model for XML Retrieval”, In Proceedings of the 1st Workshop of the Initiative for the Evaluation of XML Retrieval (INEX), pp. 95—98, 2002.

[14] Nancy A. Chinchor, “Overview of MUC-7/MET-2”, In Proceedings of the Seventh Message Understanding Conference (MUC-7), Vol. 1, 1998.

[15] Edward A. Fox, Extending the Boolean and Vector Space Models of Information Retrieval with P-norm Queries and Multiple Concept Types, PhD Thesis, Cornell University, 1983.